Your Employees Are Already Using AI

The Case for a Compliance Gateway Between Healthcare Teams and AI Tools

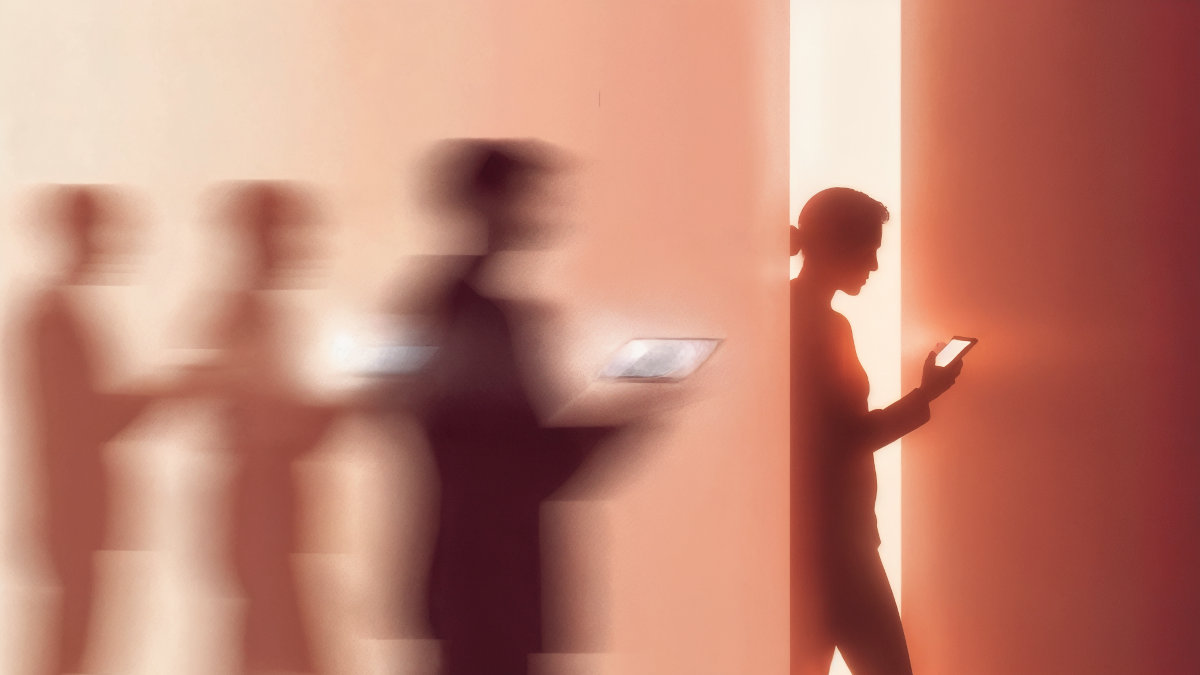

In most healthcare organizations, AI adoption didn't begin with a strategy document or a formal approval process.

It began organically, with individuals hearing how AI could help them do their jobs better and more easily. Who doesn't want that? So they started using AI tools to summarize notes, draft communications, interpret documents, or explore ideas faster. Often with good intentions. Often without visibility.

Whether an organization has formally approved AI usage or not, the reality is the same: AI is already in the workflow.

The real question is no longer if employees are using AI.

It's whether that usage is intentional, governed, and defensible.

Why AI Bans and Policy-Only Approaches Fail

Many organizations respond to AI risk in one of two ways:

- Blanket bans that are difficult to enforce

- Policies that assume perfect compliance without providing safe alternatives

Neither approach reflects how work actually gets done.

When tools are banned outright, usage tends to move underground.

When policies exist without enforcement or structure, teams are left guessing where the boundaries really are.

In both cases, leadership loses visibility, and compliance risk rises.

The Real Risk: No Boundary at All

The most common AI risk in healthcare isn't malicious behavior or reckless intent.

It's the absence of a clear boundary between sensitive work and external AI systems.

Without that boundary:

- Employees make individual judgment calls about what's safe

- Sensitive data flows inconsistently

- Evidence of proper handling is difficult to reconstruct

- Compliance posture depends on assumptions rather than controls

In regulated environments, invisible usage is often riskier than visible, governed usage.

What a "Compliance Gateway" Actually Means

A compliance gateway is not about surveillance.

It's not about monitoring individuals or second-guessing intent.

A compliance gateway is an organizational control layer that sits between employees and AI systems, making AI usage:

- Intentional - employees know which pathways are approved

- Governed - data handling decisions are explicit, not implicit

- Consistent - similar tasks follow similar rules

- Defensible - usage can be explained under review or audit

Instead of relying solely on policy documents or trust, organizations provide a clear, approved path for AI usage that aligns with regulatory obligations.
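To make the four properties above more concrete, here is a minimal sketch of the core decision a gateway might make. It is illustrative only and is not Guardian Health's implementation. The pathway names, data classifications, and the `Gateway` class are hypothetical. Each request is checked against an explicit list of approved pathways (intentional) and the data classes each pathway may receive (governed). The same inputs always produce the same decision (consistent), and every decision is recorded with its reason (defensible). The log captures the team and the decision, not the content of the request.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: approved AI pathways and the data classifications
# each one may receive. Real names and classes would come from your own policy.
APPROVED_PATHWAYS = {
    "internal-llm": {"public", "internal", "phi"},
    "external-chatbot": {"public"},
}


@dataclass
class Decision:
    allowed: bool
    reason: str


@dataclass
class Gateway:
    # Each entry records what was decided and why, never the prompt itself.
    audit_log: list = field(default_factory=list)

    def check(self, team: str, pathway: str, data_class: str) -> Decision:
        allowed_classes = APPROVED_PATHWAYS.get(pathway)
        if allowed_classes is None:
            # Intentional: only explicitly approved pathways are usable.
            decision = Decision(False, f"pathway '{pathway}' is not approved")
        elif data_class not in allowed_classes:
            # Governed: the data-handling rule is explicit, not a judgment call.
            decision = Decision(
                False, f"'{data_class}' data is not permitted on '{pathway}'"
            )
        else:
            decision = Decision(True, "approved pathway and data class")

        # Defensible: every decision leaves a reviewable record.
        self.audit_log.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "team": team,
                "pathway": pathway,
                "data_class": data_class,
                "allowed": decision.allowed,
                "reason": decision.reason,
            }
        )
        return decision
```

In this sketch, a request to send `"phi"` data to `"external-chatbot"` is denied with a stated reason, while the same request routed to `"internal-llm"` is allowed. Both outcomes land in `audit_log`, so the organization can later explain what happened without having monitored anyone's conversations.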

Governance Enables AI — It Doesn't Block It

One of the most persistent myths about AI governance is that it slows teams down.

The opposite is often true.

When employees know:

- which tools are allowed

- under what conditions data can be used

- and where sensitive work should happen

they spend less time guessing, self-censoring, or avoiding AI altogether.

Governance, when done well, becomes an enabler.

Why Visibility Matters More Than Perfection

Healthcare compliance is rarely about eliminating risk entirely.

It's about managing risk intentionally.

The organizations that struggle most with AI compliance are often not the ones using AI aggressively. They're the ones that can't see how it's being used at all.

Visibility enables informed decision-making, targeted controls, and credible explanations when questions arise.

Without visibility, even well-meaning organizations are forced to rely on hope.

The Direction the Industry Is Moving

As AI becomes embedded in everyday healthcare workflows, governance will increasingly shift:

- bans will become boundaries

- evidence will replace assumptions

- organizational controls will support individual judgment

The organizations that adapt successfully won't be the ones that stopped AI usage.

They'll be the ones that made it explicit, governed, and defensible.

Employees are already bringing AI into healthcare work because the incentives are real and the productivity gains are tangible.

The choice facing organizations isn't whether to allow AI.

It's whether AI usage will happen outside of governance or within it.

About Guardian Health

Guardian Health is being built as a governed AI workbench for healthcare teams, designed to sit between employees and AI systems and make data handling decisions explicit, auditable, and defensible. Our focus is less on controlling behavior and more on providing clear, compliant pathways for real-world AI usage.